A device-and-host vector abstraction layer.

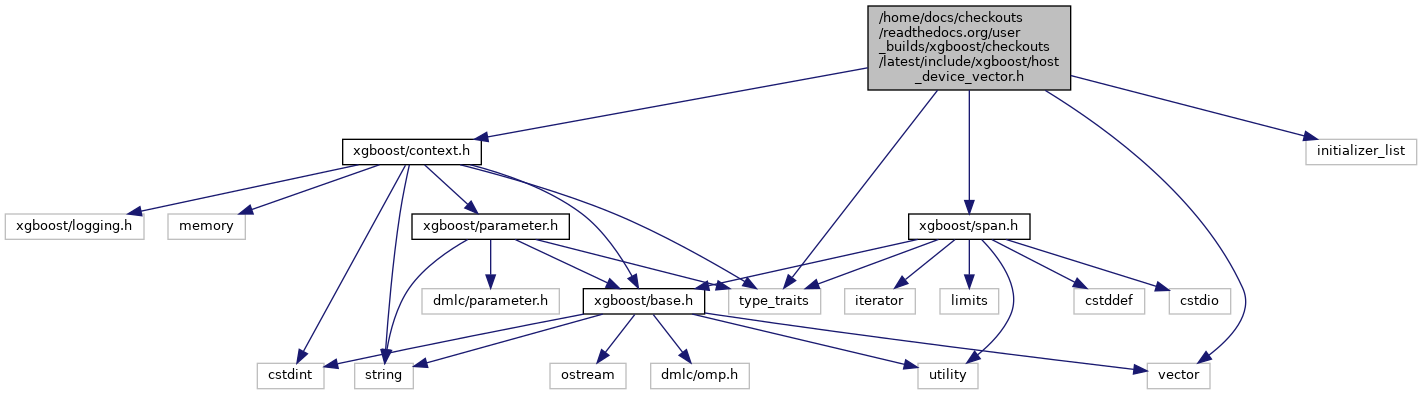

#include <xgboost/context.h>

#include <xgboost/span.h>

#include <initializer_list>

#include <type_traits>

#include <vector>

Classes

- class xgboost::HostDeviceVector< T >

Namespaces

- xgboost: Core data structure for multi-target trees.

Enumerations

- enum xgboost::GPUAccess { xgboost::kNone, xgboost::kRead, xgboost::kWrite }: Controls data access from the GPU.

Detailed Description

A device-and-host vector abstraction layer.

Copyright 2017-2019 XGBoost contributors

Why HostDeviceVector?

With CUDA, one has to explicitly manage memory through 'cudaMemcpy' calls. This wrapper class hides this management from the users, thereby making it easy to integrate GPU/CPU usage under a single interface.

Initialization/Allocation:

One can choose to initialize the vector on the CPU or the GPU at construction time (using the 'devices' argument). Alternatively, use the 'Resize' method to allocate or resize memory explicitly, and the 'SetDevice' method to specify the device.

Accessing underlying data:

Use the 'HostVector' method to explicitly query for the underlying std::vector. If you need the raw device pointer, use the 'DevicePointer' method. For the performance implications of these calls, see below.

Accessing underlying data and the performance implications:

There are 4 scenarios to consider:

- HostVector and data on CPU --> no problems; the std::vector is returned immediately.

- HostVector but data on GPU --> this causes a cudaMemcpy to be issued internally. Subsequent calls to HostVector will NOT incur this penalty (assuming 'DevicePointer' is not called in between).

- DevicePointer but data on CPU --> this causes a cudaMemcpy to be issued internally. Subsequent calls to DevicePointer will NOT incur this penalty (assuming 'HostVector' is not called in between).

- DevicePointer and data on GPU --> no problems; the device pointer is returned immediately.
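The four access scenarios can be illustrated with a small toy model. This is only a sketch and not xgboost's implementation: 'ToyHostDeviceVector', its validity flags, and 'CountCopiesInDemo' are hypothetical names. A second std::vector stands in for GPU memory, and a counter is incremented where a real implementation would issue a cudaMemcpy. The sketch also assumes that every access may write, so the other side is always marked stale.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy model only: NOT xgboost's implementation. The "device" buffer is a
// second std::vector standing in for GPU memory, and copies are counted
// instead of calling cudaMemcpy.
template <typename T>
class ToyHostDeviceVector {
 public:
  explicit ToyHostDeviceVector(std::size_t n, T v = T{}) : host_(n, v) {}

  // Returns the host copy, syncing from the "device" only if it is stale.
  std::vector<T>& HostVector() {
    if (!host_valid_) {
      host_ = device_;  // stands in for a device-to-host cudaMemcpy
      ++copies_;
      host_valid_ = true;
    }
    device_valid_ = false;  // assume the caller may write to the host copy
    return host_;
  }

  // Returns the "device" pointer, syncing from the host only if it is stale.
  T* DevicePointer() {
    if (!device_valid_) {
      device_ = host_;  // stands in for a host-to-device cudaMemcpy
      ++copies_;
      device_valid_ = true;
    }
    host_valid_ = false;  // assume the caller may write through the pointer
    return device_.data();
  }

  int copies() const { return copies_; }

 private:
  std::vector<T> host_;
  std::vector<T> device_;
  bool host_valid_ = true;     // data starts on the host
  bool device_valid_ = false;
  int copies_ = 0;
};

// Walks through the four scenarios and returns how many copies were made.
int CountCopiesInDemo() {
  ToyHostDeviceVector<float> v(4, 1.0f);
  v.HostVector()[0] = 2.0f;      // HostVector, data on host: no copy
  float* d = v.DevicePointer();  // DevicePointer, data on host: one copy
  d[1] = 3.0f;
  v.DevicePointer();             // DevicePointer, data on device: no copy
  const std::vector<float>& h = v.HostVector();  // HostVector, data on device: one copy
  assert(h[0] == 2.0f && h[1] == 3.0f);
  v.HostVector();                // repeated HostVector: no copy
  return v.copies();
}
```

In this toy model only the two calls that switch sides pay for a copy, which is why alternating between 'HostVector' and 'DevicePointer' in a loop is the pattern to avoid.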

What if xgboost is compiled without CUDA?

In that case, there is a special implementation that always falls back to working with std::vector. This logic can be found in host_device_vector.cc
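As a rough illustration of the fallback idea, the sketch below keeps one public interface and backs it with nothing but a std::vector when no device support is compiled in. It is not the code in host_device_vector.cc: the macro 'TOY_USE_CUDA' and the names 'ToyImpl', 'ToyVector', and 'FallbackDemoSize' are hypothetical, and the device-backed branch is deliberately left out.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical macro for this sketch; xgboost's real build flag may differ.
#if defined(TOY_USE_CUDA)
#error "The device-backed implementation is omitted from this sketch."
#else
// CPU-only fallback: the implementation is just a std::vector.
template <typename T>
struct ToyImpl {
  std::vector<T> data;
};
#endif

// The public interface is the same regardless of which ToyImpl is compiled.
template <typename T>
class ToyVector {
 public:
  explicit ToyVector(std::size_t n, T v = T{}) : impl_{std::vector<T>(n, v)} {}

  std::vector<T>& HostVector() { return impl_.data; }
  std::size_t Size() const { return impl_.data.size(); }

 private:
  ToyImpl<T> impl_;
};

// Builds a 3-element vector, appends one element, and returns the new size.
std::size_t FallbackDemoSize() {
  ToyVector<int> v(3, 7);
  v.HostVector().push_back(9);
  return v.Size();
}
```

Callers written against 'ToyVector' do not change when the implementation behind it does, which is the property the CPU-only build relies on.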

Why not consider CUDA unified memory?

We did consider it. However, it poses complications if we need to support compiling both with and without the CUDA toolkit. It was easier to have 'HostDeviceVector' with a special-case implementation in host_device_vector.cc

Note: The Size and Devices methods are thread-safe.